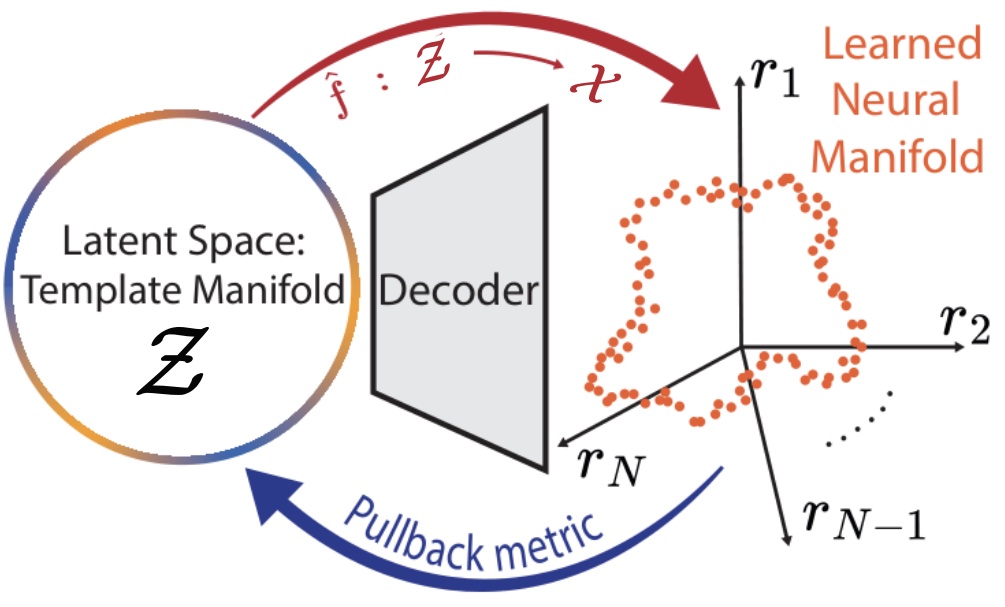

Our deep generative approach to learning the curvature of neural manifolds.

Neuro-Geometry

How does our brain structure information? Can we represent brain activity through geometric modeling?

We explore the geometric representations of cognition, such as:

- neural manifolds, i.e., the smooth spaces traced out by neuronal activity in circuits such as the head direction circuit, grid cells, and place cells,
- neural representations, i.e., the structures formed by the activity that visual stimuli ("images") evoke in the visual cortex.

Paper Spotlight:

Francisco Acosta, Sophia Sanborn, Khanh Dao Duc, Manu Madhav, and Nina Miolane. "Quantifying Extrinsic Curvature in Neural Manifolds." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. Topology, Algebra and Geometry for Applications. 2023.
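To make "extrinsic curvature" concrete, here is a minimal sketch on synthetic data. It is not the method of the paper above, which learns the manifold from recordings with a deep generative model; it only illustrates the quantity being estimated. It assumes a hypothetical head-direction population of 8 neurons with cosine tuning curves and evenly spaced preferred directions. As the head angle varies, the population activity traces a ring in 8-dimensional neural space, and the curvature of that ring can be estimated with finite differences as κ = |γ''⊥| / |γ'|², where γ''⊥ is the part of the acceleration normal to the velocity. The names `ring_activity` and `extrinsic_curvature` and all parameter values are illustrative assumptions.

```python
import numpy as np


def ring_activity(theta, n_neurons=8, gain=1.0, baseline=1.0):
    """Firing rates of a hypothetical head-direction population.

    Neuron i has cosine tuning around an evenly spaced preferred angle phi_i,
    so the population vector traces a closed 1-D curve (a ring) in
    n_neurons-dimensional space as the head angle theta varies.
    Returns an array of shape (len(theta), n_neurons).
    """
    phis = np.linspace(0.0, 2.0 * np.pi, n_neurons, endpoint=False)
    return baseline + gain * np.cos(theta[:, None] - phis[None, :])


def extrinsic_curvature(curve, dtheta):
    """Curvature of a closed, uniformly sampled curve embedded in R^n.

    Uses periodic central differences for velocity and acceleration,
    then kappa = |acceleration normal to velocity| / |velocity|^2.
    """
    nxt = np.roll(curve, -1, axis=0)
    prv = np.roll(curve, 1, axis=0)
    vel = (nxt - prv) / (2.0 * dtheta)
    acc = (nxt - 2.0 * curve + prv) / dtheta**2
    speed2 = np.sum(vel**2, axis=1)
    # Remove the tangential part of the acceleration (it changes speed, not direction).
    tangential = (np.sum(acc * vel, axis=1) / speed2)[:, None] * vel
    normal = acc - tangential
    return np.linalg.norm(normal, axis=1) / speed2


theta = np.linspace(0.0, 2.0 * np.pi, 360, endpoint=False)
activity = ring_activity(theta)
kappa = extrinsic_curvature(activity, dtheta=2.0 * np.pi / 360)
print(activity.shape, kappa.min().round(3), kappa.max().round(3))
```

For this idealized population (with at least 3 neurons) the ring is a planar circle of radius `gain * sqrt(n_neurons / 2)`, so the estimate is close to 0.5 everywhere, and doubling `gain` halves it. Manifolds in recorded data are generally not perfect circles, so their curvature varies along the ring, which is what makes estimating it informative.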

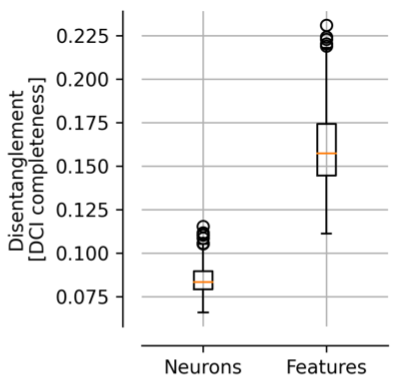

Our approach extracts interpretable ("disentangled") features from the activity of neurons in the inferior temporal (IT) cortex, which is known to play a critical role in the visual recognition of objects.

Interpretability

Can we read out the interpretable concepts organized in our brains? Can we define a coordinate system that allows us to decode the information stored in neuronal activity?

We break down the complexity of neural systems into simple parts that we can understand, and examine the concepts of:

- Disentanglement: how neural networks decompose features into elementary components,
- Superposition: how neural networks can represent more features than they have neurons, and thereby capture the complexity of the outside world.

Paper Spotlight:

David Klindt, Sophia Sanborn, Francisco Acosta, Frédéric Poitevin, Nina Miolane. "Identifying Interpretable Visual Features in Artificial and Biological Neural Systems." (2023)
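Superposition can be illustrated with a hypothetical toy model. This is an illustrative assumption, not the method of the paper above: three features are stored in two neurons by giving each feature a unit direction 120° apart in neuron space, and they are read out with a linear map followed by a ReLU. When the input is sparse, i.e., only one feature is active at a time, all three features are recovered exactly. When two are active together, they interfere. The names `W`, `encode`, and `decode` are illustrative.

```python
import numpy as np

# Hypothetical toy model: 3 features stored in 2 "neurons".
# Each feature gets a unit direction in the 2-D neuron space, 120 degrees apart.
angles = 2.0 * np.pi * np.arange(3) / 3
W = np.stack([np.cos(angles), np.sin(angles)])  # shape (2 neurons, 3 features)


def encode(x):
    """Map a 3-D feature vector to 2 neuron activations."""
    return W @ x


def decode(h):
    """Linear readout followed by a ReLU, which clips negative interference."""
    return np.maximum(W.T @ h, 0.0)


# One feature at a time (sparse input): each feature is recovered exactly,
# because the other two directions have dot product -0.5 and are clipped to 0.
for k in range(3):
    x = np.eye(3)[k]
    print(k, decode(encode(x)).round(3))

# Two features at once: interference shrinks both recovered values to 0.5.
print(decode(encode(np.array([1.0, 1.0, 0.0]))).round(3))
```

The ReLU is the key design choice here: it discards the negative cross-talk between feature directions, so sparse inputs let two neurons carry three features, at the cost of interference whenever features co-occur.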